AI: The Force Multiplier

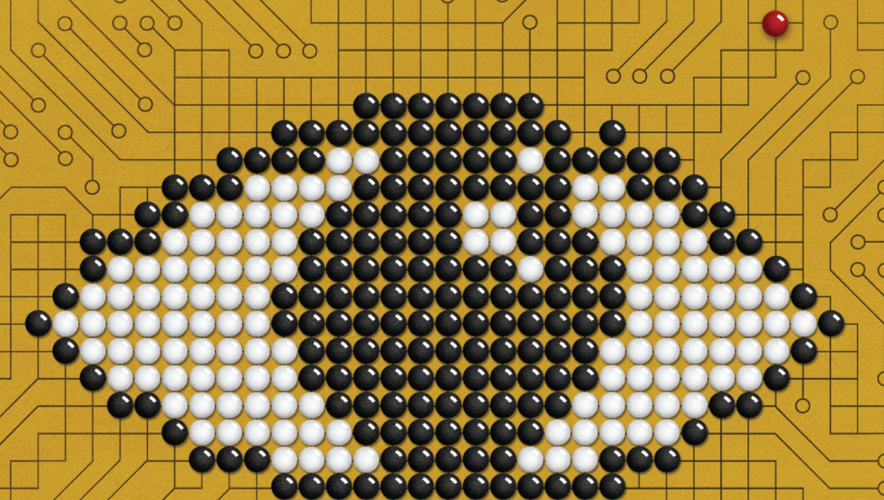

Go is not just a game. It can also serve as an analogy for life, a method of meditation, an exercise in abstract reasoning, or even a window into a player’s personality. The ancient board game from China is played by two players on a 19-by-19 gridded wooden board with black and white stones. Players use the stones to surround and capture opposing stones or to mark territory, and the board allows roughly 10 to the power of 170 possible configurations.

“There is no simple procedure to turn a clear lead into a victory—only continued good play,” according to the American Go Association. “The game rewards patience and balance over aggression and greed; the balance of influence and territory may shift many times in the course of a game, and a strong player must be prepared to be flexible but resolute.”

A typical game on a normal board can take 45 minutes to an hour to complete, but games between professionals can last for hours. Even supercomputers cannot enumerate every sequence of moves that could be played in a game.
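A rough calculation gives a sense of that scale. The sketch below is illustrative only: the rate of one billion positions per second is an assumed figure for a hypothetical machine, and the 3-to-the-361 bound counts every way to fill the board, including arrangements the rules of Go would not allow.

```python
import math

BOARD_POINTS = 19 * 19  # 361 intersections on a full-size board

# Each intersection is empty, black, or white, so 3**361 is an upper bound
# on board configurations (it also counts arrangements that break the rules).
upper_bound = 3 ** BOARD_POINTS

# Order of magnitude of that bound: 361 * log10(3), a little over 172.
magnitude = BOARD_POINTS * math.log10(3)

# Assumption: a hypothetical machine that checks one billion positions a second.
POSITIONS_PER_SECOND = 10 ** 9
SECONDS_PER_YEAR = 60 * 60 * 24 * 365
years_needed = 10 ** 170 // (POSITIONS_PER_SECOND * SECONDS_PER_YEAR)

print(f"upper bound on configurations has {len(str(upper_bound))} digits")
print(f"years to visit 10^170 positions: about 10^{len(str(years_needed)) - 1}")
```

Even at that assumed speed, visiting every configuration would take vastly longer than the age of the universe, which is why Go programs cannot rely on brute-force enumeration.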

This is why it was an exciting moment for the future of technology when AlphaGo, the artificial intelligence (AI) program from Google’s DeepMind, beat one of the best players of the past decade. AlphaGo bested Lee Sedol, winner of 18 world titles, in four out of five games in a 2016 match.

“During the games, AlphaGo played a handful of highly inventive winning moves, several of which, including move 37 in game two, were so surprising they overturned hundreds of years of received wisdom, and have since been examined extensively by players of all levels,” DeepMind said in a press release.

AlphaGo then won again in May 2017, in what was the AI’s final match event. “The research team behind AlphaGo will now throw their energy into the next set of grand challenges, developing advanced general algorithms that could one day help scientists as they tackle some of our most complex problems, such as finding new cures for diseases, dramatically reducing energy consumption, or inventing revolutionary new materials,” DeepMind said in a press release. “If AI systems prove they are able to unearth significant new knowledge and strategies in these domains too, the breakthroughs could be truly remarkable. We can’t wait to see what comes next.”

Neither can the rest of the world. The AI market is projected to reach $70 billion by 2020 and will impact consumers, enterprises, and governments, according to The Future of AI is Here, a PricewaterhouseCoopers (PwC) initiative.

“Some tech optimists believe AI could create a world where human abilities are amplified as machines help mankind process, analyze, and evaluate the abundance of data that creates today’s world, allowing humans to spend more time engaged in high-level thinking, creativity, and decision-making,” PwC said in a recent report, How AI is pushing man and machine closer together.

This is where cybersecurity professionals and experts have shown the most interest in AI: its potential to amplify the human workforce, freeing analysts to look at the bigger picture and handle problems that machines are not yet capable of solving.

“The goal of AI in cybersecurity is to make people more efficient, to be a force multiplier,” says Ely Kahn, cofounder and vice president of business development for threat hunting platform Sqrrl. “There’s a huge labor shortage in the cybersecurity industry. I think AI has the ability to help with that by making the existing cybersecurity analysts more productive.”

The basics. AI is defined as the development of computer systems that perform tasks typically requiring human intelligence. The term was first used in a 1955 proposal for a Dartmouth summer research project on AI by J. McCarthy of Dartmouth, M. L. Minsky of Harvard, N. Rochester of IBM, and C. E. Shannon of Bell Telephone Laboratories.

The authors requested a two-month, 10-man study of AI to attempt to find out “how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves,” according to the proposal.

Since then, AI has advanced, and there are now many broad areas that fit under the overall umbrella of AI, including deep learning, cognitive computing, data science, and machine learning, says Anand Rao, partner at PwC and global artificial intelligence lead.

Machine learning is one of the largest areas getting attention right now, Rao says. Machine learning is what its name describes—the science and engineering of making machines learn, according to PwC.

This is done by feeding a machine large amounts of data and having it derive from that data a model of what counts as normal and abnormal behavior.

“In machine learning, the idea is you don’t know exactly what the rules are, so you can’t write a program,” Rao explains. “Usually we get an input, we write specific instructions that produce an output; we can do that if we know what it is that we are trying to do. But when we don’t know that, it becomes hard.”

This is where the two subcategories of machine learning come into play: supervised and unsupervised learning.

Unsupervised machine learning trains the system on data that carries no human-supplied labels, and the machine keeps learning as new data arrives, says Kahn, who is the former director of cybersecurity for the White House’s national security staff. Unsupervised machine learning algorithms are “continuously resetting, so they are learning what’s normal inside an organization and what’s abnormal inside the organization, and continuously learning based on the new data that’s fed into it,” he explains.
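A minimal sketch of that idea, under stated assumptions, is a detector that keeps a running estimate of what is normal and updates it with every new observation. The class name `RunningBaseline`, the three-standard-deviation threshold, the warm-up length, and the login counts are all hypothetical choices made for illustration; this is not a description of how Sqrrl or any other product works.

```python
import math


class RunningBaseline:
    """Online mean and variance (Welford's method) over a stream of values."""

    def __init__(self, threshold=3.0, warmup=5):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations
        self.threshold = threshold  # assumed cutoff, in standard deviations
        self.warmup = warmup        # observations to collect before flagging

    def is_abnormal(self, value):
        if self.n < self.warmup:
            return False
        std = math.sqrt(self.m2 / (self.n - 1))
        if std == 0:
            return value != self.mean
        return abs(value - self.mean) / std > self.threshold

    def update(self, value):
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (value - self.mean)

    def observe(self, value):
        """Score the value against the current baseline, then learn from it."""
        flagged = self.is_abnormal(value)
        self.update(value)
        return flagged


# Made-up data: logins per hour for one account, with a single burst.
logins_per_hour = [12, 15, 11, 14, 13, 12, 16, 240, 14, 13]
baseline = RunningBaseline()
flags = [baseline.observe(v) for v in logins_per_hour]
print(flags)
```

No labels are supplied anywhere; the detector infers "normal" from the data alone. One design caveat: because the burst is also folded into the baseline, it inflates the estimate of normal variation afterward, which is one reason real systems tend to be more careful about what they learn from.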

With supervised learning, humans train the system on labeled data, teaching it which patterns or anomalies to identify. However, the two types of learning can be used in combination; they do not need to be kept separate.

For instance, supervised machine learning can be used to allow analysts to provide feedback for algorithms the system is using, “so if analysts see something that our unsupervised machine learning algorithms detect that is a false positive or a true positive, the analysts can flag it as such,” Kahn says. “That feedback is fed into our algorithms to power our supervised machine learning loop…you can think of it as two complementary loops reinforcing each other.”
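The feedback loop Kahn describes can be sketched in a few lines, assuming the unsupervised stage emits a numeric anomaly score for each alert and analysts mark each alert as a true or false positive. The function name `learn_threshold`, the scores, and the verdicts below are invented for illustration; the learner is a simple one-feature threshold classifier, chosen for brevity rather than because any vendor uses it.

```python
def learn_threshold(labeled_alerts):
    """Pick the score cutoff that misclassifies the fewest analyst verdicts.

    labeled_alerts: list of (anomaly_score, is_true_positive) pairs.
    """
    if not labeled_alerts:
        raise ValueError("need at least one labeled alert")
    candidates = sorted({score for score, _ in labeled_alerts})
    best_cutoff, best_errors = candidates[0], len(labeled_alerts) + 1
    for cutoff in candidates:
        # An alert is predicted to be a true positive when score >= cutoff.
        errors = sum((score >= cutoff) != is_true_positive
                     for score, is_true_positive in labeled_alerts)
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff


# (score from the unsupervised detector, analyst verdict) - made-up values
analyst_feedback = [
    (3.1, False), (3.4, False), (4.0, False),
    (5.2, True), (6.8, True), (3.9, False), (7.5, True),
]
cutoff = learn_threshold(analyst_feedback)

# New alerts are escalated to analysts only if they clear the learned cutoff.
new_scores = [3.3, 5.0, 8.1, 4.4]
escalated = [s for s in new_scores if s >= cutoff]
print(cutoff, escalated)
```

Each round of analyst verdicts becomes labeled training data, so the supervised step gradually filters out the kinds of alerts that analysts have already dismissed while the unsupervised step keeps surfacing new candidates.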

Deep learning. One of the main fears that many people have about the increasing role AI will play in society is that it will replace jobs that humans now hold. While that might be the case for some positions, such as receptionists or customer service jobs, experts are skeptical that AI can replace humans in cybersecurity roles.

To make the kind of decisions cybersecurity analysts make, machines would need to use deep learning, a subcategory of machine learning that powers Google’s DeepMind products and IBM’s Watson. It uses neural network techniques that are loosely modeled on the way the human brain works.

“I talked about supervised machine learning in the sense of using training data to help educate algorithms about the different types of patterns they should look for,” Kahn says. “Deep learning is that on steroids, in that you’re typically taking huge amounts of training data and passing them through neural network algorithms to look for patterns that a simpler supervised machine learning algorithm would never be able to pick up on.”

The problem with deep learning, however, is that it requires vast amounts of training data to run through the neural network algorithms.

“Google, as you can imagine, has massive amounts of training data for that, so it can feed that training data at huge scale into these neural networks to power those deep learning algorithms,” Kahn says. “In cybersecurity, we don’t quite have that benefit. It’s why deep learning algorithms have been a little bit slower in terms of adoption. There are not pools of labeled cybersecurity data that can be used to power deep learning algorithms.”

For cybersecurity, ideally, there would be a huge inventory of labeled cybersecurity incidents that could be used to create deep learning algorithms; the inventory would have information about how a site was compromised and what exploit was used.

“In today’s environment, there is no massive clearinghouse of that information,” Kahn adds. “Companies generally don’t want to share that information with each other; it’s sensitive.”

This is holding back the cybersecurity industry in terms of taking the next step with AI, and Kahn says he doesn’t see companies’ unwillingness to share data changing any time soon.

“It’s going to be very hard—less from the technical reasons and more from the policy and legal reasons,” he says. “I don’t know if we’ll ever get to a point where companies are willing to share that level of detail with each other to power those types of deep learning algorithms.”

However, big companies that have vast amounts of data may be able to take advantage of deep learning in the future, Kahn says.

AI today. Numerous cybersecurity products available today are marketed as AI products or as products that use machine learning. These products tend to be used to understand patterns of threat actors and then look for abnormal behavior within the end users’ system, Rao says.

For instance, a product could be used to look at denial of service attacks, “how that happens, the frequency at which they are coming, and then developing patterns that you can start observing over a period of time,” he explains.
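As a rough illustration of tracking request frequency over time, the sketch below counts requests per source in fixed time windows and flags any source that exceeds a limit. The 60-second window, the limit of 100 requests, the function names, and the synthetic traffic are all assumptions made for the example; real products would work from far richer signals.

```python
from collections import Counter


def requests_per_window(events, window_seconds=60):
    """Count requests per (source, window) from (timestamp, source) pairs."""
    counts = Counter()
    for timestamp, source in events:
        counts[(source, int(timestamp // window_seconds))] += 1
    return counts


def noisy_sources(events, window_seconds=60, limit=100):
    """Return sources that exceed `limit` requests in any single window."""
    counts = requests_per_window(events, window_seconds)
    return sorted({source for (source, _), n in counts.items() if n > limit})


# Synthetic traffic using reserved documentation addresses.
events = [(t * 0.1, "203.0.113.7") for t in range(1500)]     # about 10 per second
events += [(t * 5.0, "198.51.100.20") for t in range(30)]    # one every 5 seconds
flagged = noisy_sources(events)
print(flagged)
```

Collected over days or weeks, per-window counts like these are the raw material for the longer-term patterns Rao describes.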

These patterns can help companies identify who is trying to infiltrate their systems because the behavior of hobbyist hackers, organized hacking groups, and nation-states differs.

“Once you start profiling, you start looking at how to prevent certain types of attacks from happening,” according to Rao. “Based on the types of profiling, you have various types of intervention.”

This blending of man and machine, with AI identifying patterns and humans making decisions based on them, is how AI will change the future of cybersecurity and help bolster the workforce, Kahn says.

“Optimally, we start seeing a very close blending of man and machine in that we’re reliant on relatively simple algorithms to detect anomalies. Those algorithms are advancing and getting more sophisticated using AI-type technology to reduce false positives and increase true positives,” he explains. “So, analysts are spending more time on the things that matter, as opposed to chasing dead ends.”