Masterful Manipulation: Deepfakes and Social Engineering

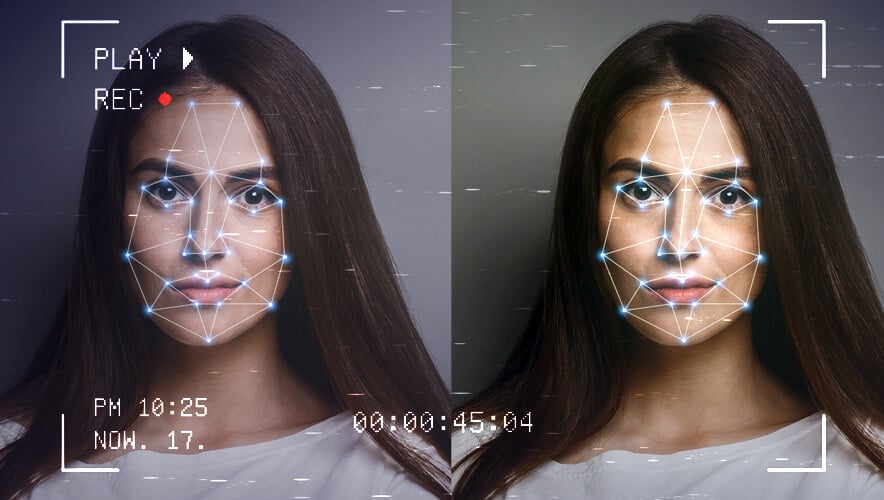

Security professionals are familiar with attacks against people, places, assets, and information, but what about attacks on your very senses? What do you do when you can’t believe the evidence of your own eyes and ears?

In Thursday’s Game Changer session at GSX+, “Disinformation and Deepfake Media: How Critical is the Rise and Spread?,” hacker and Snyk Application Security Advocate Alyssa Miller explains how bad actors could leverage increasingly accessible deep learning neural networks to manipulate media—and potentially impact organizations’ reputations and futures.

Deepfake photos, videos, and audio have been on the scene for several years and have primarily been used to swap a celebrity’s face onto another person’s body or to alter what someone said on video, whether for entertainment, mischief, or malice.

Producing an accurate and believable deepfake video once took abundant resources: weeks of study, a large pool of sample video to imitate, and heavy computing power. However, the technology is advancing at such a breakneck pace, Miller says, that the time needed to create a passable deepfake video is shrinking from weeks to minutes. Algorithms are becoming faster and more accurate while using less computing power, because they rely on shortcuts that approximate the target image instead of replicating it exactly. This makes deepfake technology more accessible to the average consumer and puts it within easy reach of potential attackers.

Consider the case of the CEO of a UK-based energy firm who transferred more than €220,000 ($243,000) to a fraudster after criminals used artificial intelligence-based software to impersonate the voice of the chief executive of the firm’s German parent company. Strong controls and security education should prevent most of these attacks from succeeding, Miller says, but the incident demonstrates that deepfake audio or video can become just another tool in an attacker’s social engineering arsenal.

Deepfake videos could be used to extort high-ranking figures or to manipulate share prices, Miller adds. For example, if manipulated videos of Amazon CEO Jeff Bezos were released online, “Would it really matter if they were real or not? Once public opinion has been shifted or it has impacted the bottom line of Amazon, does it really matter?” she asks.

This form of “outsider trading,” Miller explains, could sharply shift public perception, and that shift can damage an organization’s long-term prospects even if the video is immediately denounced as fake or as deliberate disinformation.

“Combating disinformation about your organization should be a part of your incident response plan anyway,” Miller says. Beyond deepfakes alone, that means understanding “just the idea of what does disinformation mean to your organization and having a plan of how you’re going to combat that.”

Part of that plan should include proactively monitoring social media for threats, running a security awareness campaign on how to spot deepfake video and other manipulated media, and cultivating positive relationships with media outlets that can help disseminate accurate information during a crisis.

Want to learn more about deepfake technology and what it means for your organization? Check out the full session, including a Q&A with Keyaan Williams, CEO at CLASS-LLC, on Thursday, 24 September, from 10:15 a.m. to 11:05 a.m. EDT.